The 4-Step Process to Prioritise Your Cost Containment Initiatives


You may not know where to start, just that you must act swiftly.

If so, then this guide is for you.

It is famously said that “You can’t shrink your way to greatness”, but with a financial and health crisis, you do need to make cuts to survive.

In the early days of COVID, you raced to complete transformative initiatives to keep the organisation operating as expected. Many digital transformation initiatives became your top priority, helping transform the organisation in a matter of weeks rather than months or years.

However, a new reality has emerged for many: IT spend is too high and unsustainable within our unpredictable lockdown economy. Like many financial and IT leaders, you may be under pressure to get the operating environment ‘back to normal’ and must find a way to continue to deliver without the same level of resources.

Unfortunately, many now-exhausted IT leaders don’t want to dismantle the progress made over 2020, yet realise that they must deal with overspending even though many initiatives depend on one another.

The Cost Reduction and Containment Initiatives for Enterprises in 2021

IT cost containment and reduction initiatives range from small changes to efforts involving the entire organisation. Easy, near pain-free reductions include cancelling unused or underused monthly SaaS subscriptions, while more complex, time-consuming initiatives involve code evaluation and shifting workloads to more cost-efficient platforms.

The cost containment and reduction initiatives many leaders are undertaking in 2021 include:

- Evaluating existing projects for Pause, Stop and Continue

- Terminating unused SaaS or cloud services

- Ensuring application code minimises computing resources

- Shifting workloads or data storage to the most cost-efficient platforms

- Negotiating down contracts

- Consolidating databases and/or enterprise software onto less costly platforms, leading to a significant reduction in software licensing costs and operating costs

- Increasing productivity of assets through increased use or consolidation

- Driving automation across the IT department

- Evaluating the impact of CapEx and OpEx on the IT budget, and leveraging SaaS-like consumption models for capital projects

- Reducing internal service levels

Step 1: Begin with a View to Strategic Cost Containment

Research shows that organisations that invest strategically during tough times are more likely to emerge as market leaders in the future. Tough times require difficult actions.

All organisations have some easy, tactical opportunities to save budget, but this is often not enough. Cost-cutting alone, without understanding the organisational impact, is a recipe for disaster (and career limiting!). Frozen or suspended costs may provide immediate expense relief, but may resurface at the wrong time. Getting it right means making strategic cost decisions.

Cost containment and optimisation initiatives should be sustainable over the short term and long term. Therefore, leaders should ensure decisions are made with a full understanding of the business impact and avoid cuts that simply shift spend — spend that is likely to return in another place or time without any overall gain or benefit to the organisation.

Step 2: Evaluate Cost Decisions with a Cost Optimisation Framework

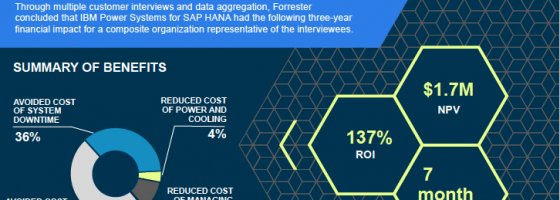

To assess which cost containment and optimisation initiatives and programs to undertake in 2021, TES recommends the use of a cost optimisation framework. The framework balances the cost impact and potential benefits against the impact on the business, time to value, risk (business and technical) and any required investment.

Not all cost containment initiatives will result in the same benefits. By assessing cost containment initiatives within a framework, a prioritised and optimised list of cost containment initiatives will emerge that will:

a) Meet cost-cutting targets, and

b) Ensure the organisation is well-positioned for better days ahead.

The recommended framework consists of six (6) key areas to analyse and determine your prioritised cost-cutting initiatives:

Potential Financial Benefit

Estimate the financial impact each cost initiative can have on the bottom line.

Ask: How much can be cut from my budget if the initiative is implemented? Is there an effect on cash flow in the short and long term?

Business Impact

The optimal cost reductions are those that occur within the same fiscal period. While long-term cost savings can drive the organisation forward, they may not produce much-needed immediate cost savings. Determine what impact an initiative will have on the operations of a specific business unit or function and on your people.

Ask: Will there be an adverse impact on day-to-day activities and operations, such as decreased productivity or product time to market? If the organisation fails to grasp these effects, initiatives may fail.

Time to Value

Whether cost containment and optimisation initiatives are approached with Waterfall or Agile thinking, the time it will take to realise the cost savings and improve business value needs to be considered. If the cost savings will not be realised until the next fiscal period, then the initiative may not be as valuable as one whose value is delivered instantly, no matter the ‘size of the prize’.

Ask: Can the cost savings be captured and realised within the desired time frame (weeks/months/fiscal year)? What is the best method to measure soft savings with an initiative?

Degree of Organisational Risk

The effectiveness of the cost containment initiative may depend on whether your organisation and people can change and adapt to new processes or structures.

Ask: Can our people ensure the changes are made? Does the organisation possess the capability of adapting and learning to change?

Degree of Technical Risk

This risk resides within the domain of IT leaders. IT leaders must work across the organisation to ensure IT changes can be integrated within the current operations. Delays caused by or attributed to the initiative could result in a loss of service delivery or productivity.

Ask: Can the change undermine the ability of our systems to deliver services?

Investment

Cost optimisation sometimes isn’t about cost reduction; in some cases, it is about sustained improvements in business processes, productivity and time to market. Some initiatives will require an initial investment that leadership (and/or the executive board) must agree to fund. Present a business case showing the potential business benefits vs. the status quo and the level of investment required.

Ask: Does the initiative require a large, upfront investment before savings can be realised? Can our organisation make an investment at all?
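One rough way to frame the investment question is to ask how many months of savings it takes to cover the upfront spend, and whether that fits the time frame you care about. The sketch below is illustrative only: the function names, the flat monthly saving and the example figures are assumptions for this guide and are not part of the TES framework.

```python
import math


def payback_months(upfront_investment, monthly_saving):
    """Months until cumulative savings cover the upfront investment.

    Assumes a flat monthly saving (a simplification). Returns None when
    the initiative produces no saving and so never pays back.
    """
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_investment / monthly_saving)


def pays_back_within(upfront_investment, monthly_saving, months_available):
    """True if the investment is recovered within the given time frame."""
    months = payback_months(upfront_investment, monthly_saving)
    return months is not None and months <= months_available


# Hypothetical example: a 120,000 investment that saves 20,000 per month,
# checked against nine months remaining in the fiscal year.
print(payback_months(120_000, 20_000))
print(pays_back_within(120_000, 20_000, 9))
```

An initiative that cannot pay back inside the desired window may still be worth funding, but it then belongs in the business case as a longer-term investment and not as an immediate saving.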

Step 3: Determine Your Optimised Cost Containment List

Each organisation is different in terms of risk appetite, policies and investment considerations in challenging times, just to name a few. Your decision framework should account for these factors.

Start by weighting each of the six (6) assessment areas above, with Potential Financial Benefit and Business Impact weighted as one group and the other four weighted together as a second. Score the proposed initiative across each of the six areas. Once you have the scores, calculate a weighted assessment score for the initiative and map it to a 3×3 grid. Repeat this for all the initiatives under consideration. When all the initiatives have been mapped, prioritise your list so that high-impact initiatives with low risk and short time to value are actioned first.
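The weighted assessment score and the 3×3 grid can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration and should be tuned to your own organisation: the 1 to 5 scoring scale, the weights, the band cut-offs and the ranking rule. In this sketch, a higher score in the first group means a more favourable outcome (larger saving, less adverse business impact), and a higher score in the second group means more time, risk or investment.

```python
from dataclasses import dataclass

# Hypothetical weights; each group sums to 1.0.
VALUE_WEIGHTS = {"financial_benefit": 0.6, "business_impact": 0.4}
EFFORT_WEIGHTS = {
    "time_to_value": 0.30,
    "organisational_risk": 0.25,
    "technical_risk": 0.25,
    "investment": 0.20,
}


@dataclass
class Initiative:
    name: str
    scores: dict  # assessment area -> score from 1 (low) to 5 (high)


def weighted_score(scores, weights):
    """Weighted average of the scores for one group of assessment areas."""
    return sum(scores[area] * weight for area, weight in weights.items())


def band(score):
    """Place a 1-5 weighted score into one of three grid bands (assumed cut-offs)."""
    if score < 2.34:
        return "low"
    if score < 3.67:
        return "medium"
    return "high"


def assess(initiative):
    """Return (value score, effort score, 3x3 grid cell) for one initiative."""
    value = weighted_score(initiative.scores, VALUE_WEIGHTS)
    effort = weighted_score(initiative.scores, EFFORT_WEIGHTS)
    return value, effort, (band(value), band(effort))


def prioritise(initiatives):
    """Rank by value minus effort, so high-value, low-effort items come first."""
    def net(initiative):
        value, effort, _ = assess(initiative)
        return value - effort
    return sorted(initiatives, key=net, reverse=True)


# Hypothetical scores for two initiatives from the list earlier in this guide.
candidates = [
    Initiative("Consolidate databases", {
        "financial_benefit": 5, "business_impact": 3, "time_to_value": 4,
        "organisational_risk": 3, "technical_risk": 4, "investment": 4}),
    Initiative("Terminate unused SaaS", {
        "financial_benefit": 3, "business_impact": 5, "time_to_value": 1,
        "organisational_risk": 1, "technical_risk": 1, "investment": 1}),
]

for item in prioritise(candidates):
    value, effort, cell = assess(item)
    print(f"{item.name}: value={value:.2f}, effort={effort:.2f}, cell={cell}")
```

Ranking by value minus effort is only one possible rule. Some teams may prefer to work through the grid cell by cell, starting with the high-value, low-effort corner.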

Need help with the assessment? Download our free IT cost containment framework tool to complete your assessment.

Step 4: Action the Cost Containment Initiatives and Reduce your IT Spend

With your assessment complete and the cost containment initiatives prioritised, the work to contain, reduce, and change begins.

The strategic assessment outlines those initiatives appropriate for your organisation, balancing potential cost reductions against the net benefits and potential risks.

Realising the cost savings means putting these initiatives into action. The Enterprise Specialists at TES are experienced in database consolidations, data centre migrations, staff augmentation, hybrid cloud AI designs, contract renegotiations and code audits for performance productivity, the kinds of cost containment projects many enterprises are executing in 2021.

Our specialists ensure you capture the anticipated cost reductions. Book a free assessment session today with an Enterprise Specialist to help you develop your executional roadmap to cost containment and reductions.